For psychology researchers running experiments online, it can be challenging to ensure that participants are paying attention and engaging with the material. This matters because inattention can lead to inaccurate results and undermine the validity of the study.

In this article, we’ve put together a few tactics you can try to improve your participants’ engagement with your studies. This will help you get better data, spend less time on data cleaning, and increase the chances that you’ll find meaningful results through your research.

We’ll focus on how to improve the attention of participants who are well-intentioned but underperform because of inattention or lack of investment in the task.

In a second article, we’ll also cover ways to reduce intentional cheating by opportunistic participants who might try to earn rewards without properly performing in the experiment.

If you find yourself:

then here are 6 ways to increase your participants’ attention:

A great place to start is the instructions screen. Usually, participants hit a wall of text that outlines the often complicated instructions for the experiment. This can make participants feel exhausted and tempted to skim past the instructions to start the task, often missing important details.

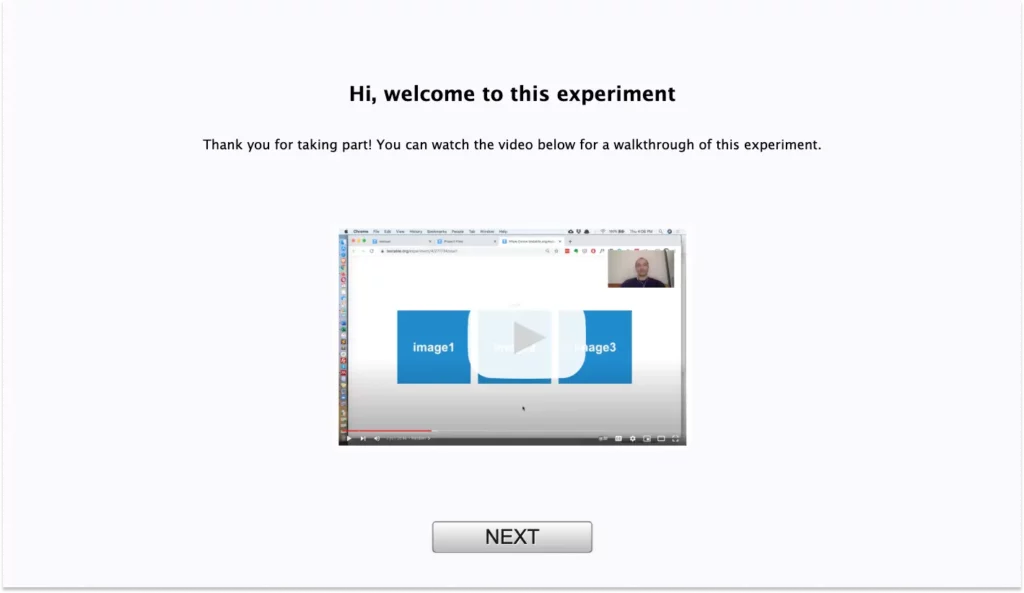

A good way to improve your participants’ experience and make sure they understand what they’ll have to do is to present the instructions as a video instead.

Tools like Loom or OBS are free and make it easy to record yourself and your screen as you explain your task. A video also gives you a chance to connect with your participants more personally, which can make them care more about the success of your research project and give it their best attention. Just be careful not to give away your hypothesis unconsciously!

On our own platform, “Testable Minds”, we’ve seen researchers achieve great results with instruction videos. They report a drop in the percentage of data they need to exclude and a much stronger overall signal in the data for more complicated tasks.

Online participants usually expect to receive a monetary reward for their time and effort in taking part in your experiments. The amount will depend on the specific participant pool you are using. On Testable Minds, for example, we enforce a minimum hourly fee (currently $6.50 for regular and $9.00 for verified participants).
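To make the arithmetic concrete, here is a minimal sketch of how you might work out the lowest reward that still meets an hourly minimum for a study of a given length. The rates are the ones quoted above; the function name `minimum_reward` and the choice to round up to the next cent are assumptions made for illustration, not a description of how Testable Minds calculates fees.

```python
from decimal import Decimal, ROUND_CEILING

# Hourly minimums quoted in this article (they may change over time).
REGULAR_RATE = Decimal("6.50")
VERIFIED_RATE = Decimal("9.00")


def minimum_reward(duration_minutes: int, verified: bool = False) -> Decimal:
    """Smallest reward (in dollars) that meets the hourly minimum.

    Rounding up to the next cent is an assumption made for this sketch.
    """
    if duration_minutes <= 0:
        raise ValueError("duration_minutes must be positive")
    rate = VERIFIED_RATE if verified else REGULAR_RATE
    raw = rate * Decimal(duration_minutes) / Decimal(60)
    return raw.quantize(Decimal("0.01"), rounding=ROUND_CEILING)


print(minimum_reward(20))                 # regular participants, 20-minute study
print(minimum_reward(20, verified=True))  # verified participants, 20-minute study
```

For a demanding study, you would treat this value as a floor and add to it rather than pay exactly the minimum.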

We usually recommend increasing the reward if your experiment is particularly demanding. This could mean that your study is unusually long, mentally taxing, or uses stimuli or questions that cause emotional distress. An attractive reward can be a great motivation for participants to keep on going in difficult experiments.

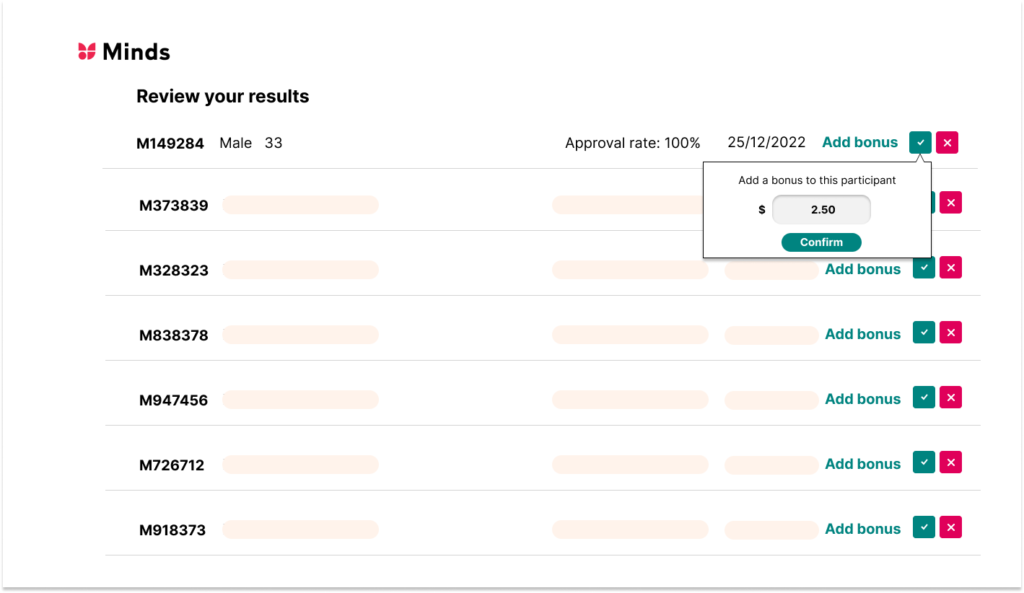

You may also want to advertise a bonus payment that is tied to good performance. On Testable Minds, this is easy to do: you can add bonus payments for participants after reviewing their data. Ideally, the conditions for receiving the bonus are transparent and achievable so participants can work toward it.

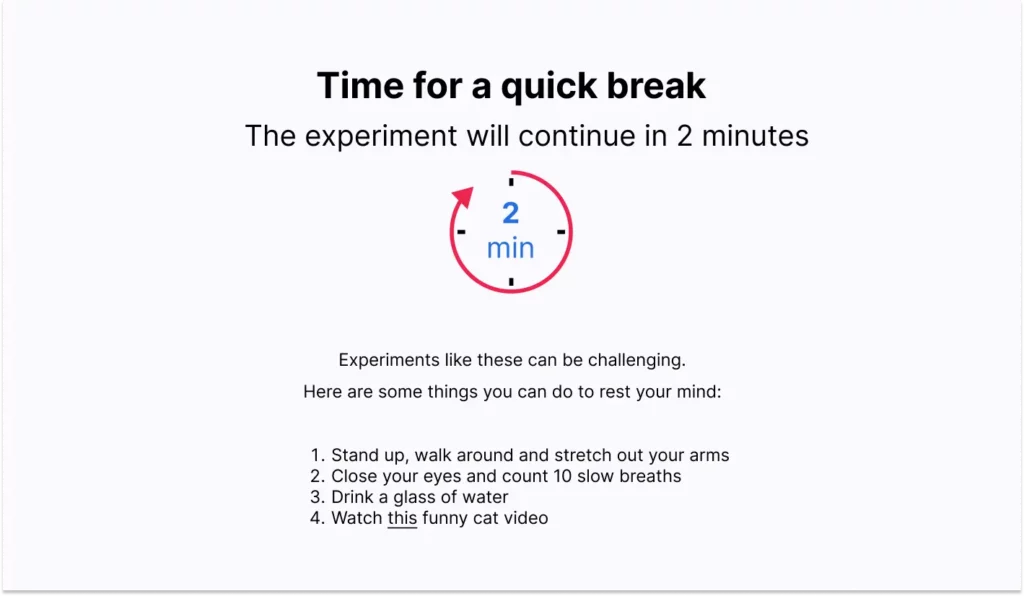

If your experiment is long (more than 15-20 minutes), think about introducing breaks (here is how to implement them in Testable). It might help to give your participants behavioral prompts during the breaks that help them relax, such as nudging them to stand up and stretch for a few seconds, or to rest their eyes by looking away from the screen.

Changing up the types of tasks your participants are doing during a study can also help sustain attention. For example, if your experiment involves a series of cognitive tasks, you could intersperse these tasks with short surveys or self-report measures. This can help to keep participants engaged and prevent them from becoming bored or stressed out.
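If you build your trial list programmatically, a small helper can decide where the breaks should go. The sketch below is only an illustration: the function name `break_points` and the default of one break per 10 minutes of task time are assumptions, and it is independent of how breaks are actually configured in Testable.

```python
def break_points(
    n_trials: int,
    seconds_per_trial: float,
    max_block_minutes: float = 10.0,  # assumed default, adjust to your task
) -> list[int]:
    """Return the 1-based trial numbers after which a break should be shown.

    Trials are grouped into blocks that last at most `max_block_minutes`,
    based on an estimated duration per trial. No break is scheduled after
    the final trial.
    """
    if n_trials <= 0 or seconds_per_trial <= 0 or max_block_minutes <= 0:
        raise ValueError("all arguments must be positive")
    trials_per_block = max(1, int(max_block_minutes * 60 // seconds_per_trial))
    return list(range(trials_per_block, n_trials, trials_per_block))


# 300 trials at roughly 6 seconds each is about 30 minutes of task time.
print(break_points(300, 6))
```

A short study that fits into a single block simply gets an empty list, so the same code can be reused across experiments of different lengths.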

Consider implementing performance feedback for your participants as a way to boost attention during an online experiment. If your experimental design allows it, it might be a good idea to give trial-by-trial performance feedback about the accuracy and speed of responses. Especially for experiments where fast reaction times are important, it’s a great tactic to push participants to their peak performance.

However, there are other ways of offering feedback that are less intrusive and work with a broader range of experimental designs.

For example, you can promise that you’ll show participants a final score and tell them how well they have performed compared to a certain benchmark. There are various ways to do this in Testable.
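As a rough illustration of the idea, the sketch below turns a list of trial results into an end-of-study message that compares the participant’s accuracy with a benchmark. The `Trial` class, the `final_feedback` function, and the wording of the message are all hypothetical and not part of Testable; the benchmark value is something you would choose yourself, for example from pilot data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    correct: bool   # whether the response was correct
    rt_ms: float    # response time in milliseconds


def final_feedback(trials: list[Trial], benchmark_accuracy: float) -> str:
    """Build a summary message comparing accuracy with a chosen benchmark."""
    if not trials:
        raise ValueError("at least one trial is required")
    accuracy = sum(t.correct for t in trials) / len(trials)
    comparison = "at or above" if accuracy >= benchmark_accuracy else "below"
    message = (
        f"You answered {accuracy:.0%} of trials correctly, which is "
        f"{comparison} the benchmark of {benchmark_accuracy:.0%}."
    )
    # Report speed only for correct responses, if there are any.
    correct_rts = [t.rt_ms for t in trials if t.correct]
    if correct_rts:
        message += (
            f" Your average response time on correct trials was "
            f"{mean(correct_rts):.0f} ms."
        )
    return message


example = [Trial(True, 500), Trial(True, 700), Trial(False, 900), Trial(True, 600)]
print(final_feedback(example, benchmark_accuracy=0.70))
```

Whether to show speed, accuracy, or both depends on what you want participants to prioritize, so treat the message as a starting point.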

Even more broadly, you can add a few feedback screens throughout the experiment, simply updating the participant on their progress and motivating them to carry on. Something like: “You’re already halfway through, well done! We know this is a challenging task and we really appreciate your time and attention. The next part also requires your full focus, so take a breath and press the ‘Continue’ button when you’re ready!” Goal gradients work!

With online experiments, the same rules of UX and UI apply as for any good, goal-directed website. This means designing the experiment in a user-friendly way and providing clear instructions on how to participate. It is a good idea to “user test” your experiment and make sure that all instructions, buttons, questions, and interactive elements are as clear and unambiguous as possible. If participants don’t have to guess what they need to do, they can dedicate their full attention to the actual experiment.

Where you recruit participants for your studies matters. The motivations, incentives, and self-selection of participants differ from platform to platform, and these differences show up in the overall quality of the data you receive.

Pools like Amazon’s Mechanical Turk are notorious for being overrun with bots. Most of the studies offered on the website are simple surveys or repetitive tasks used to train machine learning models. Participants are generally there to earn money, and in an environment of low rewards and uninteresting tasks, they have the incentive to prioritize the quantity of surveys over the quality of responses.

Dedicated academic pools like Prolific or Testable Minds are different, as they are tailored to the needs of academic researchers. The general quality of studies, the rewards that can be earned, and earning limits ensure that participants’ incentives to deliver quality data are aligned with yours.

Going even further, the Verified Identity feature on Testable Minds ensures that participants are identified and their demographics guaranteed. This increases engagement further and reduces the risk of fraud in online research. You can find our research poster on this topic here.

Find out more about our participant pool Testable Minds.